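Because the LLM API provided inside my company differs from the usual ones, the common off-the-shelf MCP clients cannot connect to it directly. I therefore adapted the MCP chat client example from the MCP GitHub repository ( https://github.com/modelcontextprotocol/python-sdk/tree/main/examples/clients/simple-chatbot ), mainly to add support for a custom LLM API.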

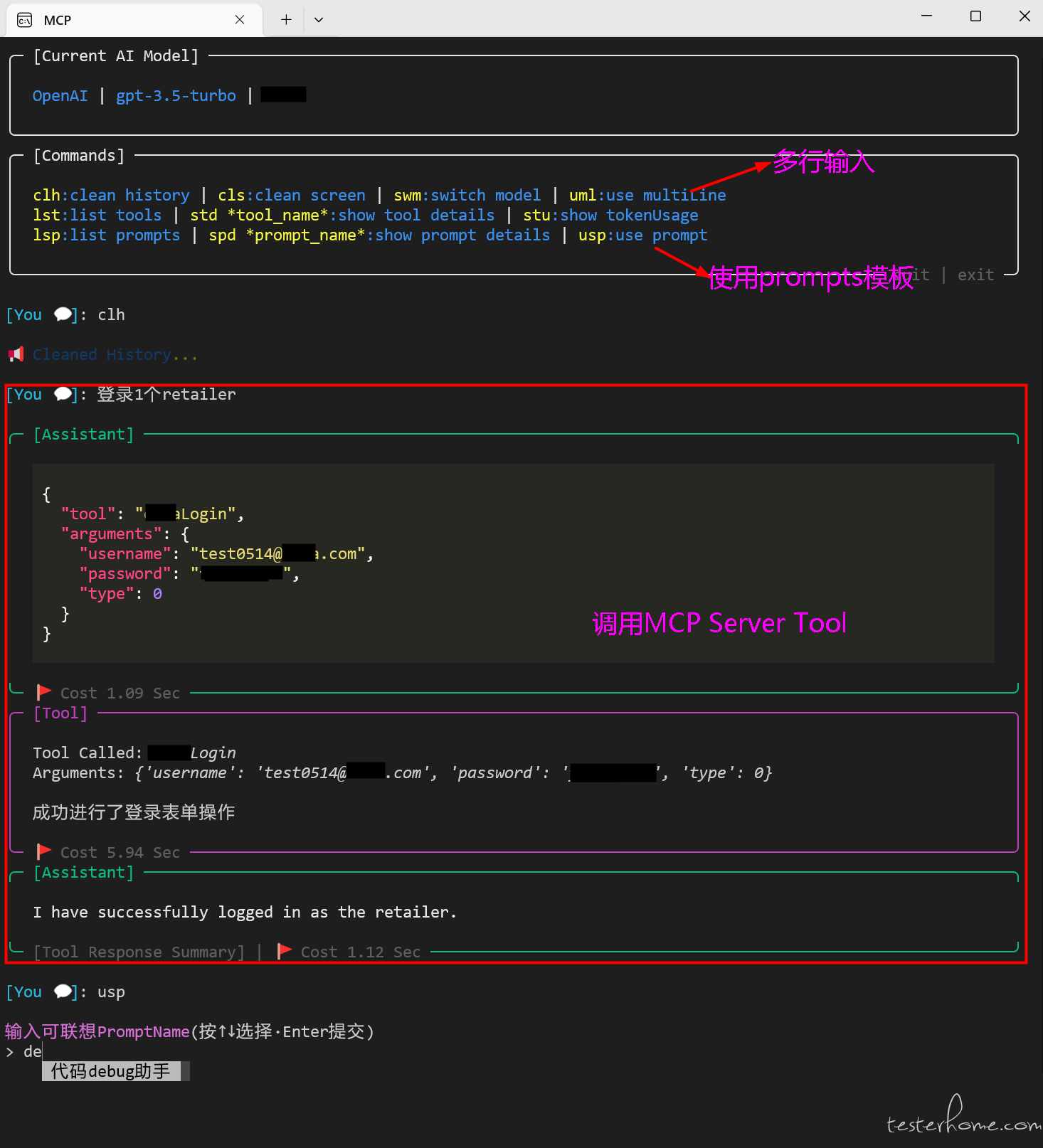

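In addition, I used rich and prompt_toolkit to improve the console interface, so the output is no longer in log format, and added more MCP features along with the commands a chat client needs.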

Added commands: clear the conversation history, switch the LLM, view the tool list and details, view the prompts list and details, use a prompt.

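Added handling for ambiguous input: when the user's prompt is unclear and matches several candidates, the user can choose which one to run (this was added to the system prompt).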

Implemented handling for prompts that need several consecutive tool calls (how well this works depends on the LLM's capability).
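The consecutive tool-call handling can be sketched roughly as a loop: ask the model, run the tool if the reply is a tool request, feed the result back, and repeat until the model answers in plain text. This is only a minimal sketch, not the code of this client. It assumes the model requests a tool with a JSON reply of the form `{"tool": ..., "arguments": ...}`, similar to the upstream simple-chatbot example; `ask_llm`, `run_tool`, `chat_turn` and `max_steps` are hypothetical names introduced for illustration.

```python
import json
from typing import Callable, Optional

# Hypothetical signatures: `ask_llm` takes the message history and returns the
# model's raw text reply; `run_tool` executes a named tool with its arguments.
AskLLM = Callable[[list], str]
RunTool = Callable[[str, dict], str]


def parse_tool_call(reply: str) -> Optional[dict]:
    """Return the tool request if the reply is a JSON tool call, else None."""
    try:
        data = json.loads(reply)
    except json.JSONDecodeError:
        return None
    if isinstance(data, dict) and "tool" in data and "arguments" in data:
        return data
    return None


def chat_turn(messages: list, ask_llm: AskLLM, run_tool: RunTool,
              max_steps: int = 5) -> str:
    """Keep calling tools until the model answers in plain text."""
    for _ in range(max_steps):
        reply = ask_llm(messages)
        messages.append({"role": "assistant", "content": reply})
        call = parse_tool_call(reply)
        if call is None:
            return reply  # plain text: treat it as the final answer
        result = run_tool(call["tool"], call["arguments"])
        # Feed the tool output back so the model can decide the next step.
        messages.append({"role": "system", "content": f"Tool result: {result}"})
    # Assumed safety limit so a confused model cannot loop forever.
    return "Stopped: too many consecutive tool calls."
```

The step limit is a design choice of this sketch: because the number of chained calls is decided by the model, a cap keeps a misbehaving model from looping indefinitely.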

[1] Configuration: servers_config.json, example

    {
      "mcpServers": {
        "sqlite": {
          "command": "uvx",
          "args": ["mcp-server-sqlite", "--db-path", "./test.db"],
          "disabled": false
        },
        "puppeteer": {
          "command": "npx",
          "args": ["-y", "@modelcontextprotocol/server-puppeteer"],
          "disabled": false
        }
      }
    }
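To illustrate how a file like this might be consumed, here is a minimal sketch that reads it and keeps only the servers whose `disabled` flag is not set. The function name `load_enabled_servers` is hypothetical, and treating a missing `disabled` key as "enabled" is an assumption of this sketch, not something the client is known to do.

```python
import json
from pathlib import Path


def load_enabled_servers(path: str = "servers_config.json") -> dict:
    """Return {name: {"command": ..., "args": [...]}} for enabled servers."""
    config = json.loads(Path(path).read_text(encoding="utf-8"))
    enabled = {}
    for name, spec in config.get("mcpServers", {}).items():
        if spec.get("disabled", False):
            continue  # skip servers switched off in the config
        enabled[name] = {
            "command": spec["command"],
            "args": spec.get("args", []),
        }
    return enabled
```

Each returned entry carries the `command` and `args` needed to launch that server over stdio, for example through the `StdioServerParameters` class of the `mcp` package.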

[2] Environment variable file: .env, example

    OPEN_API_KEY="sk-proj-f********"
    OPEN_PROXY="http://192.168.1.8:8080"
    DEEPSEEK_API_KEY="sk-****"
    DEEPSEEK_PROXY=
    CUSTOM_OPEN_ID="xxxxxxxx"
    CUSTOM_PROXY=
    NO_PROXY="127.0.0.1,localhost"
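The variables above follow a `<PROVIDER>_...` naming pattern, so the settings for one model can be gathered by prefix. The following is a rough sketch of that idea only: `provider_settings` is a hypothetical helper, and reading an empty value such as `DEEPSEEK_PROXY=` as "no proxy" is an assumption. In a real run, `load_dotenv()` from python-dotenv would first copy the `.env` entries into `os.environ`.

```python
import os
from typing import Mapping, Optional


def provider_settings(prefix: str,
                      env: Optional[Mapping[str, str]] = None) -> dict:
    """Collect the `<PREFIX>_*` variables into a small settings dict."""
    env = os.environ if env is None else env
    return {
        # OPEN and DEEPSEEK carry an API key; CUSTOM carries an ID instead.
        "api_key": env.get(f"{prefix}_API_KEY") or None,
        "open_id": env.get(f"{prefix}_OPEN_ID") or None,
        # Assumption: an empty or missing proxy value means "no proxy".
        "proxy": env.get(f"{prefix}_PROXY") or None,
    }
```

Passing the environment as a parameter keeps the helper easy to try out with a plain dict, without touching the real process environment.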

Dependencies: python>=3.13

    mcp==1.9.0
    python-dateutil==2.9.0.post0
    python-dotenv==1.1.0
    python-multipart==0.0.20
    pytz==2025.2
    rich==14.0.0
    shellingham==1.5.4
    six==1.17.0
    sniffio==1.3.1
    sse-starlette==2.3.5
    starlette==0.46.2
    typer==0.15.4
    typing-inspection==0.4.1
    typing_extensions==4.13.2
    tzdata==2025.2
    urllib3==2.4.0
    uvicorn==0.34.2
    wcwidth==0.2.13
    prompt_toolkit==3.0.51

