这里列一下用到的缩略语和解释:

section0

stack

stack - The stack is the memory set aside as scratch space for a thread of execution. When a

function is called, a block is reserved on the top of the stack for local variables and some bookkeeping data. When that function returns, the block becomes unused and can be used the next

time a function is called. The stack is always reserved in a LIFO (last in first out) order; the most

recently reserved block is always the next block to be freed.

heap

• heap - The heap is memory set aside for dynamic allocation. Unlike the stack, there is no enforced

pattern to the allocation and deallocation of blocks from the heap; you can allocate a block at any

time and free it at any time.

process

• process - A process is an instance of a computer program that is being executed, typically with

restricted rights. It consists of an address space and one or more threads of control. It is the main

abstraction for protection provided by the operating system kernel.

address space

• address space - The address space for a process is the set of memory addresses that it can use.

The memory corresponding to each process’ address space is private and cannot be accessed by

other processes, unless it is shared.

• C - A high level programming language. In order to run it, C will be compiled to low level machine

instructions like x86 64 or RISC-V. Note that it is often times easier to express high level ideas in

C, but C cannot be used to express many details (such as register allocation).

• x86 - A very popular family of instruction sets (which includes i386 and x86 64). Unlike MIPS

or RISC-V, x86 is primarily based on CISC (Complex Instruction Set Computing) architecture.

Virtually all servers, desktops, and most laptops (with Intel or AMD) CPUs natively execute x86.

section1

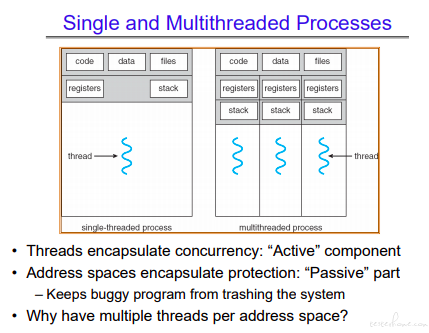

这个图请牢记,heap 的空间线程间也可以直接共享。

thread

• thread - A thread is a single execution sequence that can be managed independently by the operating system. (See A&D, 4.2)

• isolation - Isolating (separating) applications from one another so that a potentially misbehaving

application cannot corrupt other applications or the operating system.

内核与用户态双模式

• dual-mode operation - Dual-mode operation refers to hardware support for multiple privilege

levels: a privileged level (called supervisor-mode or kernel-mode) that provides unrestricted access

to the hardware, and a restricted level (called user-mode) that executes code with restricted rights.

特权指令(只可以内核用)

• privileged instruction - Instruction available in kernel mode but not in user mode. Two examples of privileged instructions are the instructions to enable and disable interrupts on the processor.

If user-level code could disable interrupts, it would guarantee that the user-level process could run

on a hardware thread for as long as it wanted.

非特权指令

• unprivileged instruction - Instruction available in both user mode and kernel mode. An example of an unprivileged instruction is the add instruction or the instructions that read or write to

memory. User-level processes are allowed to perform these standard operations that all computer

programs need in order to run.

创建进程

• fork - A C function that calls the fork syscall that creates a new process by duplicating the calling

process. The new process, referred to as the child, is an exact duplicate of the calling process

(except for a few details, read more in the man page). Both the newly created process and the

parent process return from the call to fork. On success, the PID of the child process is returned in

the parent, and 0 is returned in the child. On failure, -1 is returned in the parent, no child process

is created.

等待系统返回

• wait - A class of C functions that call syscalls that are used to wait for state changes in a child

of the calling process, and obtain information about the child whose state has changed. A state

change is considered to be: the child terminated; the child was stopped by a signal; or the child

was resumed by a signal

进程返回码

• exit code - The exit status or return code of a process is a 1 byte number passed from a child

process (or callee) to a parent process (or caller) when it has finished executing a specific procedure

or delegated task

exec

• exec - The exec() family of functions replaces the current process image with a new process image.

The initial argument for these functions is the name of a file that is to be executed.

POSIX 线程

• pthreads - A POSIX-compliant (standard specified by IEEE) implementation of threads. A

pthread_t is usually just an alias for “unsigned long int”.

pthread create

• pthread create - Creates and immediately starts a child thread running in the same address space

of the thread that spawned it. The child executes starting from the function specified. Internally,

2

CS 162 Fall 2019 Section 1: OS Concepts, Processes, Threads

this is implemented by calling the clone syscall.

int pthread_create(pthread_t *thread, const pthread_attr_t *attr,

void *(*start_routine) (void *), void *arg);

线程入队

• pthread join - Waits for a specific thread to terminate, similar to waitpid(3).

/* On success, pthread_join() returns 0; on error, it returns an error number. */

int pthread_join(pthread_t thread, void **retval);

临界区,只允许一个线程执行

• critical section - A section of code that accesses a shared resource and must not be concurrently

run by more than a single thread.

竞争关系

• race condition - A situation whose outcome is dependent on the sequence of execution of multiple

threads running simultaneously.

lcok

• lock - Synchronization primitives that provide mutual exclusion. Threads may acquire or release

a lock. Only one thread may hold a lock at a time. If a thread attempts to acquire a lock that

is held by some other thread, it will block at that line of code until the lock is released and it

successfully acquires it. Implementations can vary.

section2

信号量

• semaphore - Synchronization primitives that are used to control access to a shared variable in a

more general way than locks. A semaphore is simply an integer with restrictions on how it can be

modified:

– When a semaphore is initialized, the integer is set to a specified starting value.

– A thread can call down() (also known as P) to attempt to decrement the integer. If the

integer is zero, the thread will block until it is positive, and then unblock and decrement the

integer.

– A thread can call up() (also known as V) to increment the integer, which will always succeed.

Unlike locks, semaphores have no concept of ” ownership”, and any thread can call down() or up()

on any semaphore at any time.

系统调用

• system call - In computing, a system call is how a program requests a service from an operating system’s kernel. This may include hardware-related services, creation and execution of new

processes, and communication with integral kernel services such as process scheduling.

信号,软中断

• signals - A signal is a software interrupt, a way to communicate information to a process about

the state of other processes, the operating system, and the hardware. A signal is an interrupt in

the sense that it can change the flow of the program —when a signal is delivered to a process, the

process will stop what its doing, either handle or ignore the signal, or in some cases terminate,

depending on the signal.

• int signal(int signum, void (*handler)(int)) - signal() is the primary system call for signal

handling, which given a signal and function, will execute the function whenever the signal is

delivered. This function is called the signal handler because it handles the signal.

文件描述

• file descriptors - File descriptors are an index into a file-descriptor table stored by the kernel.

The kernel creates a file-descriptor in response to an open call and associates the file-descriptor

with some abstraction of an underlying file-like object; be that an actual hardware device, or a

file-system or something else entirely. Using file descriptors, a process’s read or write calls are

routed to the correct place by the kernel. When your program starts you have 3 file descriptors.

File Descriptor File

0 stdin

1 stdout

2 stderr

文件打开

• int open(const char *path, int flags) - open is a system call that is used to open a new file

and obtain its file descriptor. Initially the offset is 0.

2

CS 162 Fall 2019 Section 2: Synchronization, Wait and Exit, & Files

文件读

• size t read(int fd, void *buf, size t count) - read is a system call used to read count bytes

of data into a buffer starting from the file offset. The file offset is incremented by the number of

bytes read.

文件写

• size t write(int fd, const void *buf, size t count) - write is a system call that is used to write

up to count bytes of data from a buffer to the file offset position. The file offset is incremented by

the number of bytes written.

文件偏移移动

• size t lseek(int fd, off t offset, int whence) - lseek is a system call that allows you to move

the offset of a file. There are three options for whence

– SEEK SET - The offset is set to offset.

– SEEK CUR - The offset is set to current offset + offset

– SEEK END - The offset is set to the size of the file + offset

复制文件描述符

• int dup(int oldfd) - creates an alias for the provided file descriptor and returns the new fd value.

dup always uses the smallest available file descriptor. Thus, if we called dup first thing in our

program, it would use file descriptor 3 (0, 1, and 2 are already signed to stdin, stdout, stderr). The

old and new file descriptors refer to the same open file description and may be used interchangeably.

• int dup2(int oldfd, int newfd) - dup2 is a system call similar to dup. It duplicates the oldfd

file descriptor, this time using newfd instead of the lowest available number. If newfd was open,

it closed before being reused. This becomes very useful when attempting to redirect output, as

it automatically takes care of closing the file descriptor, performing the redirection in one elegant

command.

section3

pipe 管道。不太确定管程和这个系统调用有没有关系。

• pipe - A system call that can be used for interprocess communication.

More specifically, the pipe() syscall creates two file descriptors, which the process can write() to

and read() from. Since these file descriptors are preserved across fork() calls, they can be used

to implement inter-process communication.

硬件提供的原子操作

• test and set - An atomic operation implemented in hardware. Often used to implement locks

and other synchronization primitives. In this handout, assume the following implementation.

int test_and_set(int *value) {

int result = *value;

*value = 1;

return result;

}

• compare and swap (CAS) - An atomic operation implemented in hardware. This operation

compares the contents of a memory location, ptr with some value old. If the contents are equal,

CAS will write new into ptr and return true; otherwise, it will return false.

bool compare_and_swap(int *ptr, int old, int new) {

if(*ptr != old){

return false;

} else{

*ptr = new;

return true;

}

}

Condition Variable

这里还是比较复杂的,请参考 Monitor 的实现。

Hoare 和 Mesa 是 Monitor 两个实现方案。

https://testerhome.com/articles/27920

• Condition Variable - A synchronization variable that provides serialization (ensuring that events

occur in a certain order). A condition variable is defined by:

– a lock (a condition variable + its lock are known together as a monitor)

– some boolean condition (e.g. hello < 1)

– a queue of threads waiting for the condition to be true

In order to access any CV functions OR to change the truthfulness of the condition, a thread

must/should hold the lock. Condition variables offer the following methods:

– cv wait(cv, lock) - Atomically unlocks the lock, adds the current thread to cv’s thread

queue, and puts this thread to sleep.

– cv notify(cv) - Removes one thread from cv’s queue, and puts it in the ready state.

– cv broadcast(cv) - Removes all threads from cv’s queue, and puts them all in the ready

state.

When a wait() ing thread is notified and put back in the ready state, it also re-acquires the lock

before the wait() function returns.

When a thread runs code that may potentially make the condition true, it should acquire the lock,

modify the condition however it needs to, call notify() or broadcast() on the condition’s CV, so

waiting threads can be notified, and finally release the lock.

Why do we need a lock anyway? Well, consider a race condition where thread 1 evaluates the

condition C as false, then thread 2 makes condition C true and calls cv.notify, then 1 calls

cv.wait and goes to sleep. Thread 1 might never wake up, since it went to sleep too late.

• Hoare Semantics - In a condition variable, wake a blocked thread when the condition is true and

transfer control of the CPU and ownership of the lock to that thread immediately. This is difficult

to implement in practice and generally not used despite being conceptually easier to deal with.

• Mesa Semantics - In a condition variable, wake a blocked thread when the condition is true

with no guarantee on when that thread will actually execute. (The newly woken thread simply

gets put on the ready queue and is subject to the same scheduling semantics as any other thread.)

The implications of this mean that you must check the condition with a while loop instead of an

if-statement because it is possible for the condition to change to false between the time the thread

was unblocked and the time it takes over the CPU.

section4

socket

• Socket - Sockets are an abstraction of a bidirectional network I/O queue. It embodies one side

of a communication channel, meaning that two must be required for a communication channel to

form.The two ends of the communication channel may be local to the same machine, or they may

span across different machines through the Internet. Most functions that operate on file descriptors

like read() or write() work on sockets. but certain operations like lseek() do not.

文件描述符

• file descriptors - File descriptors are an index into a file-descriptor table stored by the kernel.

The kernel creates a file-descriptor in response to an open call and associates the file-descriptor

with some abstraction of an underlying file-like object; be that an actual hardware device, or a

file-system or something else entirely. Using file descriptors, a process’s read or write calls are

routed to the correct place by the kernel. When your program starts you have 3 file descriptors.

File Descriptor File

0 stdin

1 stdout

2 stderr

调度

• Scheduler - Routine in the kernel that picks which thread to run next given a vacant CPU and

a ready queue of unblocked threads.

抢占

• Preemption - The process of interrupting a running thread to allow for the scheduler to decide

which thread runs next.

调度算法

• FIFO Scheduling - First-In-First-Out (aka First-Come-First-Serve) scheduling runs jobs as they

arrive. Turnaround time can degrade if short jobs get stuck behind long ones (convoy effect);

• round-robin Scheduling - Round-Robin scheduling runs each job in fixed-length time slices

(quanta). The scheduler preempts a job that exceeds its quantum and moves on, cycling through

the jobs. It avoids starvation and is good for short jobs, but context switching overhead can become

important depending on quanta length;

• Shortest Time Remaining First Scheduling - A scheduling algorithm where the thread that

runs is the one with the least time remaining. This is ideal for throughput but also must be

approximated in practice.

• Linux CFS - Linux scheduling algorithm designed to optimize for fairness. It gives each thread a

weighted share of some target latency and then ensures that each thread receives that much virtual

CPU time in its scheduling decisions.

• Earliest Deadline First - Scheduling algorithm used in real time systems. It attempts to meet

deadlines of threads that must be processed in real time by selecting the thread that has the closest

deadline to complete first.

• Multi-Level Feedback Queue Scheduling - MLFQS uses multiple queues with priorities, dropping CPU-bound jobs that consume their entire quanta into lower-priority queues.

section5

优先级倒置

• Priority Inversion - If a higher priority thread is blocking on a resource (a lock, as far as

you’re concerned but it could be the Disk or other I/O device in practice) that a lower priority

thread holds exclusive access to, the priorities are said to be inverted. The higher priority thread

cannot continue until the lower priority thread releases the resource. This can be amended by

implementing priority donation.

优先级捐赠

这个是对应优先级倒置的处理方案

• Priority Donation - If a thread attempts to acquire a resource (lock) that is currently being held,

it donates its effective priority to the holder of that resource. This must be done recursively until

a thread holding no locks is found, even if the current thread has a lower priority than the current

resource holder. (Think about what would happen if you didn’t do this and a third thread with

higher priority than either of the two current ones donates to the original donor.) Each thread’s

effective priority becomes the max of all donated priorities and its original priority.

死锁

• Deadlock - A case of starvation due to a cycle of waiting. Computer programs sharing the same

resource effectively prevent each other from accessing the resource, causing both programs to cease

to make progress.

银行家问题(多数的课本中叫哲学家问题)

• Banker’s Algorithm - A resource allocation and deadlock avoidance algorithm that tests for

safety by simulating the allocation for predetermined maximum possible amounts of all resources,

before deciding whether allocation should be allowed to continue.

I/O

• I/O In the context of operating systems, input/output (I/O) consists of the processes by which

the operating system receives and transmits data to connected devices.

控制器(通常外部设备会有一个控制器单元,用于和 OS 的交互)

• Controller The operating system performs the actual I/O operations by communicating with

a device controller, which contains addressable memory and registers for communicating the the

CPU, and an interface for communicating with the underlying hardware. Communication may be

done via programmed I/O, transferring data through registers, or Direct Memory Access, which

allows the controller to write directly to memory.

中断,I/O 中断是中断中比较常用的。

• Interrupt One method of notifying the operating system of a pending I/O operation is to send a

interrupt, causing an interrupt handler for that event to be run. This requires a lot of overhead,

but is suitable for handling sporadic, infrequent events.

轮询(低频外设)

• Polling Another method of notifying the operating system of a pending I/O operating is simply

to have the operating system check regularly if there are any input events. This requires less

overhead, and is suitable for regular events, such as mouse input.

I/O 空间到内存空间映射

• Memory-Mapped I/O Memory-mapped I/O (not to be confused with memory-mapped file I/O)

uses the same address bus to address both memory and I/O devices – the memory and registers of

the I/O devices are mapped to (associated with) address values. So when an address is accessed

by the CPU, it may refer to a portion of physical RAM, but it can also refer to memory of the

I/O device. Thus, the CPU instructions used to access the memory can also be used for accessing

devices

section6

虚存

• Virtual Memory - Virtual Memory is a memory management technique in which every process

operates in its own address space, under the assumption that it has the entire address space to

itself. A virtual address requires translation into a physical address to actually access the system’s

memory.

内存管理单元(硬件实现,cpu 和 cache 中间)

• Memory Management Unit - The memory management unit (MMU) is responsible for translating a process’ virtual addresses into the corresponding physical address for accessing physical

memory. It does all the calculation associating with mapping virtual address to physical addresses,

and then populates the address translation structures.

地址转化框架

• Address Translation Structures - There are two kinds you learned about in lecture: segmentation and page tables. Segments are linearly addressed chunks of memory that typically contain

logically-related information, such as program code, data, stack of a single process. They are of

the form (s,i) where memory addresses must be within an offset of i from base segment s. A page

table is the data structure used by a virtual memory system in a computer operating system to

store the mapping between virtual addresses and physical addresses. Virtual addresses are used

by the accessing process, while physical addresses are used by the hardware or more specifically to

the RAM.

地址翻译缓存

• Translation Lookaside Buffer (TLB) - A translation lookaside buffer (TLB) is a cache that

memory management hardware uses to improve virtual address translation speed. It stores virtual

address to physical address mappings, so that the MMU can store recently used address mappings

instead of having to retrieve them mutliple times through page table accesses.

section7

缓存

• Cache - A repository for copies that can be accessed more quickly than the original. Caches

are good when the frequent case is frequent enough and the infrequent case is not too expensive.

Caching ensures locality in two ways: temporal (time), keeping recently accessed data items ’saved’,

and spatial (space), since we often bring in contiguous blocks of data to the cache upon a cache

miss.

平均内存访问时间

• AMAT - Average Memory Access Time: a key measure for cache performance. The formula is

(Hit Rate x Hit Time) + (Miss Rate x Miss Time) where Hit Rate + Miss Rate = 1.

强制失效(第一次读取,增加预读)

• Compulsory Miss - The miss that occurs on the first reference to a block. This also happens

with a ’cold’ cache or a process migration. There’s essentially nothing that you can do about this

type of miss, but over the course of time, compulsory misses become insignificant compared to all

the other memory accesses that occur.

容量失效(加大内存,减少占用)

• Capacity Miss - The miss that occurs when the cache can’t contain all the blocks that the

program accesses. One solution for capacity misses is increasing the cache size.

冲突失效(N-ways)

• Conflict Miss - The miss that occurs when multiple memory locations are mapped to the same

cache location (i.e a collision occurs). In order to prevent conflict misses, you should either increase

the cache size or increase the associativity of the cache. These technically do not exist in virtual

memory, since we use fully-associative caches.

同步失效 (mesi 协议)

• Coherence Miss - Coherence misses are caused by external processors or I/O devices that update

what’s in memory (i.e invalidates the previously cached data).

页表属性

• Tag - Bits used to identify the block - should match the block’s address. If no candidates match,

cache reports a cache miss.

• Index - Bits used to find where to look up candidates in the cache. We must make sure the tag

matches before reporting a cache hit.

• Offset - Bits used for data selection (the byte offset in the block). These are generally the lowestorder bits.

直连 cache

• Direct Mapped Cache - For a 2N byte cache, the uppermost (32 - N) bits are the cache tag;

the lowest M bits are the byte select (offset) bits where the block size is 2M. In a direct mapped

cache, there is only one entry in the cache that could possibly have a matching block.

N-way cache(N-way LRU)

• N-way Set Associative Cache - N directly mapped caches operate in parallel. The index is

used to select a set from the cache, then N tags are checked against the input tag in parallel.

Essentially, within each set there are N candidate cache blocks to be checked. The number of sets

is X / N where X is the number of blocks held in the cache.

section8

页面请求

• Demand Paging The process where the operating system only stores pages that are ” in demand”

in the main memory and stores the rest in persistent storage (disk). Accesses to pages not currently

in memory page fault and the page fault handler will retrieve the request page from disk (paged

in). When main memory is full, then as new pages are paged in old pages must be paged out

through a process called eviction. Many cache eviction algorithms like least recently used can be

applied to demand paging, the main memory is acting as the cache for pages which all start on

disk.

工作集

工作集和驻留集看这篇

https://blog.csdn.net/qq_28602957/article/details/53821061?utm_medium=distribute.pc_relevant.none-task-blog-BlogCommendFromMachineLearnPai2-2.control&depth_1-utm_source=distribute.pc_relevant.none-task-blog-BlogCommendFromMachineLearnPai2-2.control

• Working Set The subset of the address space that a process uses as it executes. Generally we

can say that as the cache hit rate increases, more of the working set is being added to the cache.

驻留集

• Resident Set Size The portion of memory occupied by a process that is held in main memory

(RAM). The rest has been paged out onto disk through demand paging.

内存颠簸(不断的做页面请求)

• Thrashing Phenomenon that occurs when a computer’s virtual memory subsystem is constantly

paging (exchanging data in memory for data on disk). This can lead to significant application

slowdown.

页面替换算法(LRU 不能用,开销太大)

• Clock Algorithm: An approximation of LRU. Main idea: replace an old page, not the oldest

page. On a page fault, check the page currently pointed to by the ’clock hand. Checks a use bit

which indicates whether a page has been used recently; clears it if it is set and advances the clock

hand. Otherwise, if the use bit is 0, selects this candidate for replacement.

Other bits used for Clock: ” modified”/” dirty” indicates whether page must be written back to

disk upon pageout; ” valid” indicates whether the program is allowed to reference this page; ” readonly”/” writable” indicates whether the program is allowed to modify this page.

• Nth Chance Algorithm: An approximation of LRU. A version of Clock Algorithm where each

page gets N chances before being selected for replacement. The clock hand must sweep by N

times without the page being used before the page is replaced. For a large N, this is a very good

approximation of LRU.

这个很好用,高速、低速相结合。

• Second-Chance List Algorithm: An approximation of LRU. Divides pages into two - an active

list and a second-chance list. The active list uses a replacement policy of FIFO, while the secondchance list uses a replacement policy of LRU. Not required reading, but if you’re interested in

the details, this algorithm is covered in detail in this paper: https://users.soe.ucsc.edu/

~sbrandt/221/Papers/Memory/levy-computer82.pdf. The version presented in lecture and for

the purposes of this course includes some significant simplifications.

反向页表

• Inverted Page Table - The inverted page table scheme uses a page table that contains an entry

for each phiscial frame, not for each logical page. This ensures that the table occupies a fixed

fraction of memory. The size is proportional to physical memory, not the virtual address space.

The inverted page table is a global structure – there is only one in the entire system. It stores

reverse mappings for all processes. Each entry in the inverted table contains has a tag containing

the task id and the virtual address for each page. These mappings are usually stored in associative

memory (remember fully associative caches from 61C?). Associatively addressed memory compares

input search data (tag) against a table of stored data, and returns the address of matching data.

They can also use actual hash maps.

响应时间

• Response Time Response time measures the time between a requested I/O operating and its

completion, and is an important metric for determining the performance of an I/O device.

吞吐量

• Throughput Another important metric is throughput, which measures the rate at which operations are performed over time.

异步 I/O

• Asynchronous I/O For I/O operations, we can have the requesting process sleep until the operation is complete, or have the call return immediately and have the process continue execution

and later notify the process when the operation is complete.

section9

FAT(全局表,一一对应)

• FAT - In FAT, the disk space is still viewed as an array. The very first field of the disk is the boot

sector, which contains essential information to boot the computer. A super block, which is fixed

sized and contains the metadata of the file system, sits just after the boot sector. It is immediately

followed by a file allocation table (FAT). The last section of the disk space is the data section,

consisting of small blocks with size of 4 KiB.

In FAT, a file is viewed as a linked list of data blocks. Instead of having a ” next block pointer”

in each data block to make up the linked list, FAT stores these pointers in the entries of the file

allocation table, so that the data blocks can contain 100% data. There is a 1-to-1 correspondence

between FAT entries and data blocks. Each FAT entry stores a data block index. Their meaning

is interpreted as:

If N > 0, N is the index of next block

If N = 0, it means that this is the end of a file

If N = −1, it means this block is free

Unix 文件系统

• Unix File System (Fast File System) - The Unix File System is a file system used by many

Unix and Unix-like operating systems. Many modern operating systems use file systems that are

based off of the Unix File System.

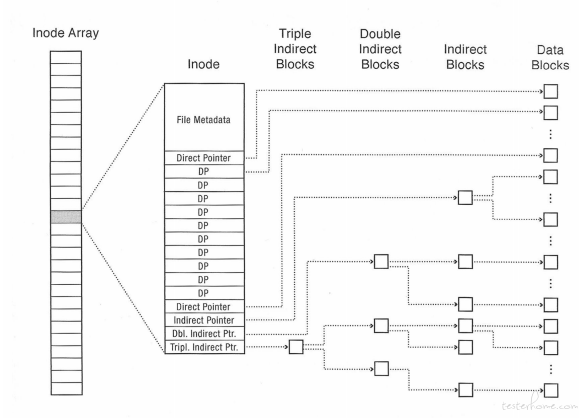

inode(Unix 文件节点)

• inode - An inode is the data structure that describes the metadata of a file or directory. Each

inode contains several metadata fields, including the owner, file size, modification time, file mode,

and reference count. Each inode also contains several data block pointers, which help the file

system locate the file’s data blocks.

Each inode typically has 12 direct block pointers, 1 singly indirect block pointer, 1 doubly indirect

block pointer, and 1 triply indirect block pointer. Every direct block pointer directly points to a

data block. The singly indirect block pointer points to a block of pointers, each of which points

to a data block. The doubly indirect block pointer contains another level of indirection, and the

triply indirect block pointer contains yet another level of indirection.

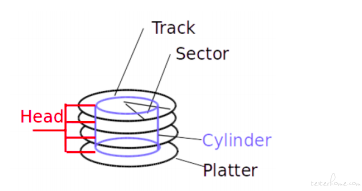

磁盘

• Hard Disk Drive (HDD) - A storage device that stores data on magnetic disks. Each disk

consists of multiple platters of data. Each platter includes multiple concentric tracks that are

further divided into sectors. Data is accessed (for reading or writing) one sector at a time. The

head of the disk can transfer data from a sector when positioned over it.

因为成本问题,Head 是一起动的。

磁盘寻址参数

• Seek Time - The time it takes for an HDD to reposition its disk head over the desired track.

• Rotational Latency - The time it takes for the desired sector to rotate under the disk head.

• Transfer Rate - The rate at which data is transferred under the disk head.

section10

checksum

• Checksum - A mathematical function which maps a (typically large) input to a fixed size output.

Checksums are meant to detect changes to the underlying data and should change if changes

occur to the underlying data. Common checksum algorithms include CRC32, MD5, SHA-1, and

SHA-256.

备份

• Replication - Replication or duplication is a common technique for preserving data in the face of

disk failure or corruption. If a disk fails, data can be read from the replica. If a sector is corrupted,

it will be detected in the checksum. The data can then be read from another replica.

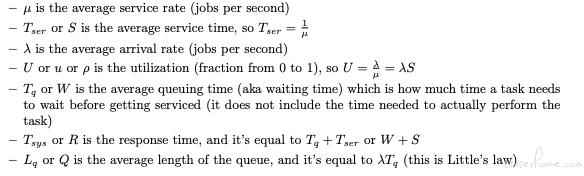

队列理论

• Queuing Theory Here are some useful symbols: (both the symbols used in lecture and in the

book are listed)

看这个例子吧:

section11

容错

• Fault Tolerance The ability to preserve certain properties of a system in the face of failure of

a component, machine, or data center. Typical properties include consistencies, availability, and

persistence.

交易(可以简单理解为一个原子操作。)

• Transaction - A transaction is a unit of work within a database management system. Each

transaction is treated as an indivisible unit which executes independently from other transactions.

The ACID properties are usually used to describe reliable transactions.

ACID(事务具有 4 个特征,分别是原子性、一致性、隔离性和持久性,简称事务的 ACID 特性)

• ACID - An acronym standing for the four key properties of a reliable transaction.

– Atomicity - The transaction must either occur in its entirety, or not at all.

– Consistency - Transactions must take data from one consistent state to another, and cannot

compromise data integrity or leave data in an intermediate state.

– Isolation - Concurent transactions should not interfere with each other; it should appear as

if all transactions are serialized.

– Durability - The effect of a committed transaction should persist despite crashes.

幂等(s=s*s)

• Idempotent - An idempotent operation can be repeated without an effect after the first iteration.

Log

• Log - An append only, sequential data structure.

Checkpoint(检查点,区别于 Savepoint)

• Checkpoint - Aka a snapshot. An operation which involves marshaling the system’s state. A

checkpoint should encapsulate all information about the state of the system without looking at

previous updates.

Write Ahead Logging (WAL)

写盘之间先记录 log,后面接 commit 统一提交。

• Write Ahead Logging (WAL) - A common design pattern for fault tolerance involves writing

updates to a system’s state to a log, followed by a commit message. When the system is started

it loads an initial state (or snapshot), then applies the updates in the log which are followed by a

commit message.

串行化

• Serializable - A property of transactions which requires that there exists an order in which

multiple transactions can be run sequentially to produce the same result. Serializability implies

isolation.

ARIES 算法

可以参考这篇:

https://blog.csdn.net/weixin_34123613/article/details/92562478

• ARIES - A logging/recovery algorithm which stands for: Algorithms for Recovery and Isolation

Exploiting Semantics. ARIES is characterized by a 3 step algorithm: Analysis, Redo, then Undo.

Upon recovery from failure, ARIES guarantees a system will remain in a consistent state.

日志文件系统

• Logging File System - A logging file system (or journaling file system) is a file system in which

all updates are performed via a transaction log (“journal”) to ensure consistency in case the system

crashes or loses power. Each file system transaction is written to an append-only redo log. Then,

the transaction can be committed to disk. In the event of a crash, a file system recovery program

can scan the journal and re-apply any transactions that may not have completed successfully. Each

transaction must be idempotent, so the recovery program can safely re-apply them.

Metadata Logging

只保存 inode 中的 Metadata 修改记录,数据内容不记录。

• Metadata Logging - A technique in which only metadata is written to the log rather than writing

the entire update to the log. Modern file systems use this technique to avoid duplicating all file

system updates.

EXT4(Linux 文件系统)

• EXT4 - A modern file system primarily used with Linux. It features an FFS style inode structure

and metadata journaling.

日志结构文件系统

• Log Structured File System - A file system backed entirely by a log.