I built this purely as a practice project; it puts no real load on the official site.

Server: Spring Boot

Data layer: MyBatis + Redis

HTML parsing: Jsoup

Hosting: Tencent Cloud

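Main code for crawling the text and storing it. Each record is written to both MySQL and Redis: the text is large, so querying it from MySQL is too slow, and reads are served from memory (Redis) instead.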

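```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;
import org.jsoup.nodes.Element;
import org.jsoup.select.Elements;
import redis.clients.jedis.Jedis;

public class CrawlingTestHome {

    // Fetch one topic page and store its body text on testHome
    public static void body(String url, TestHome testHome) {
        try {
            String hosts = "https://testerhome.com";
            Document document = Jsoup.connect(hosts + url).get();
            Elements elements = document.select("div.panel-body");
            // Phase-two requirement: separate titles, text, images, etc.
            testHome.setText(elements.text());
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // Parse one topic-list page, fetch each topic's body and cache it in Redis keyed by URL
    public static List<TestHome> home(String url) {
        List<TestHome> lists = new ArrayList<>();
        // try-with-resources closes the Redis connection when the page is done
        try (Jedis jedis = new Jedis("*。*。*", 6379)) {
            Document document = Jsoup.connect(url).get();
            Elements titles = document.select("div.topics").select("div.topic").select("div.infos");
            for (Element element : titles) {
                Elements links = element.getElementsByTag("a");
                if (links.hasAttr("title")) {
                    TestHome testHome = new TestHome();
                    String title = links.attr("title");
                    String href = links.attr("href");
                    testHome.setTitle(title.trim());
                    testHome.setUrl(href);
                    body(href, testHome);
                    // Jedis rejects null values, so skip caching when the body could not be fetched
                    if (testHome.getText() != null) {
                        jedis.set(href, testHome.getText());
                    }
                    lists.add(testHome);
                }
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
        return lists;
    }
}
```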

MyBatis mapper XML for storing and querying:

<mapper namespace="com.ming.data.dao.TestHomeDao">

<resultMap id="BaseResultMap" type="com.ming.data.entity.TestHome">

</resultMap>

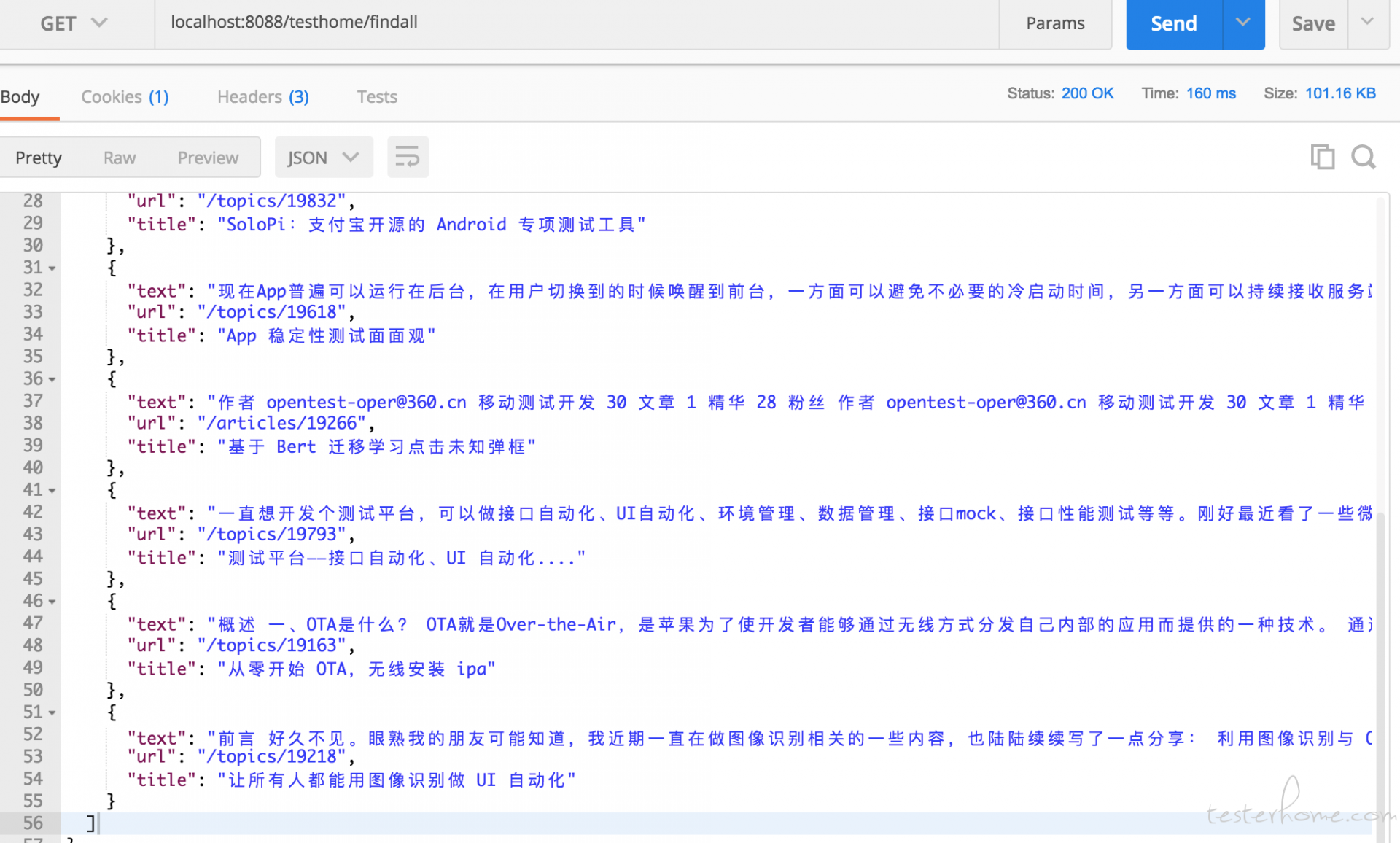

<select id="findAll" resultType="com.ming.data.entity.TestHome">

select title,text,url

from testhome limit 10

</select>

<insert id="save" parameterType="com.ming.data.entity.TestHome">

insert into

testhome(title,`text`,url)

values(#{title}, #{text}, #{url})

</insert>

<select id="findByUrl" resultType="com.ming.data.entity.TestHome">

select *

from testhome

where url=#{url}

</select>

</mapper>

Crawl entry point in the controller layer:

```java
// Crawl popular-topic pages 2 through 58 and save each page's results
@RequestMapping(value = "/crawling")
public ApiResult crawling() {
    String url = "https://testerhome.com/topics/popular?page=";
    for (int i = 2; i < 59; i++) {
        System.out.println(url + i);
        List<TestHome> lists = CrawlingTestHome.home(url + i);
        // Check for null before calling size()/isEmpty() to avoid a NullPointerException
        if (lists != null && !lists.isEmpty()) {
            testHomeService.save(lists);
        }
    }
    return ApiResult.success("");
}
```

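Crawl only the latest page: if a topic is not in the database yet, store it; otherwise discard it. The scheduled task only ever crawls this one page, so it puts no real load on the site.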

```java
// Crawl only the latest popular page and insert topics whose URL is not stored yet
public ApiResult crawlingNew() {
    String url = "https://testerhome.com/topics/popular";
    List<TestHome> lists = CrawlingTestHome.home(url);
    if (lists != null && !lists.isEmpty()) {
        for (TestHome item : lists) {
            List<TestHome> datas = testHomeService.findByUrl(item.getUrl());
            // Already in the database: skip it
            if (datas.isEmpty()) {
                testHomeService.saveOne(item);
            }
        }
    }
    return ApiResult.success("");
}
```
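The loop above issues one `findByUrl` query per crawled topic. If the stored URLs can be loaded once (for example with a hypothetical `select url from testhome` query), the same "store only if new" rule can be applied in memory with a `Set`. This is a minimal JDK-only sketch, not the project's code: `NewTopicFilter` and `filterNew` are made-up names, and URLs are plain strings rather than `TestHome` objects.

```java
import java.util.ArrayList;
import java.util.Collection;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical helper: keep only crawled URLs that are not already stored
class NewTopicFilter {

    static List<String> filterNew(List<String> crawledUrls, Collection<String> storedUrls) {
        // Copy the stored URLs into a HashSet for O(1) membership checks
        Set<String> seen = new HashSet<>(storedUrls);
        List<String> fresh = new ArrayList<>();
        for (String url : crawledUrls) {
            // Set.add returns false when the URL is already present,
            // so this also drops duplicates within the same crawled page
            if (seen.add(url)) {
                fresh.add(url);
            }
        }
        return fresh;
    }
}
```

The crawl order is preserved in the result. With Redis, `SADD` on a set of URLs returns 1 for a new member and 0 for an existing one, which gives the same check without loading the URLs into the JVM.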

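Because the crawled content comes in many shapes and formats, storing it ran into quite a few problems.

1. Inconsistent data: for example, text containing emoji could not be inserted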

```text
org.springframework.jdbc.BadSqlGrammarException:
### Error updating database. Cause: com.mysql.jdbc.exceptions.jdbc4.MySQLSyntaxErrorException: You have an error in your SQL syntax; check the manual that corresponds to your MySQL server version for the right syntax to use near 'insert into
testhome(title,`text`,url)
values('Tcloud ????-???????', '??? Py' at line 2
### The error may involve com.ming.data.dao.TestHomeDao.save-Inline
### The error occurred while setting parameters
### SQL: set names utf8mb4; insert into testhome(title,`text`,url) values(?, ?, ?)
### Cause:
```

Solution:

a. Change the character set of the database, the tables, and the columns to utf8mb4.

b. Edit /etc/my.cnf on the remote MySQL server:

```ini
[mysql]
default-character-set=utf8mb4

[client]
default-character-set=utf8mb4

[mysqld]
character-set-server=utf8mb4
```

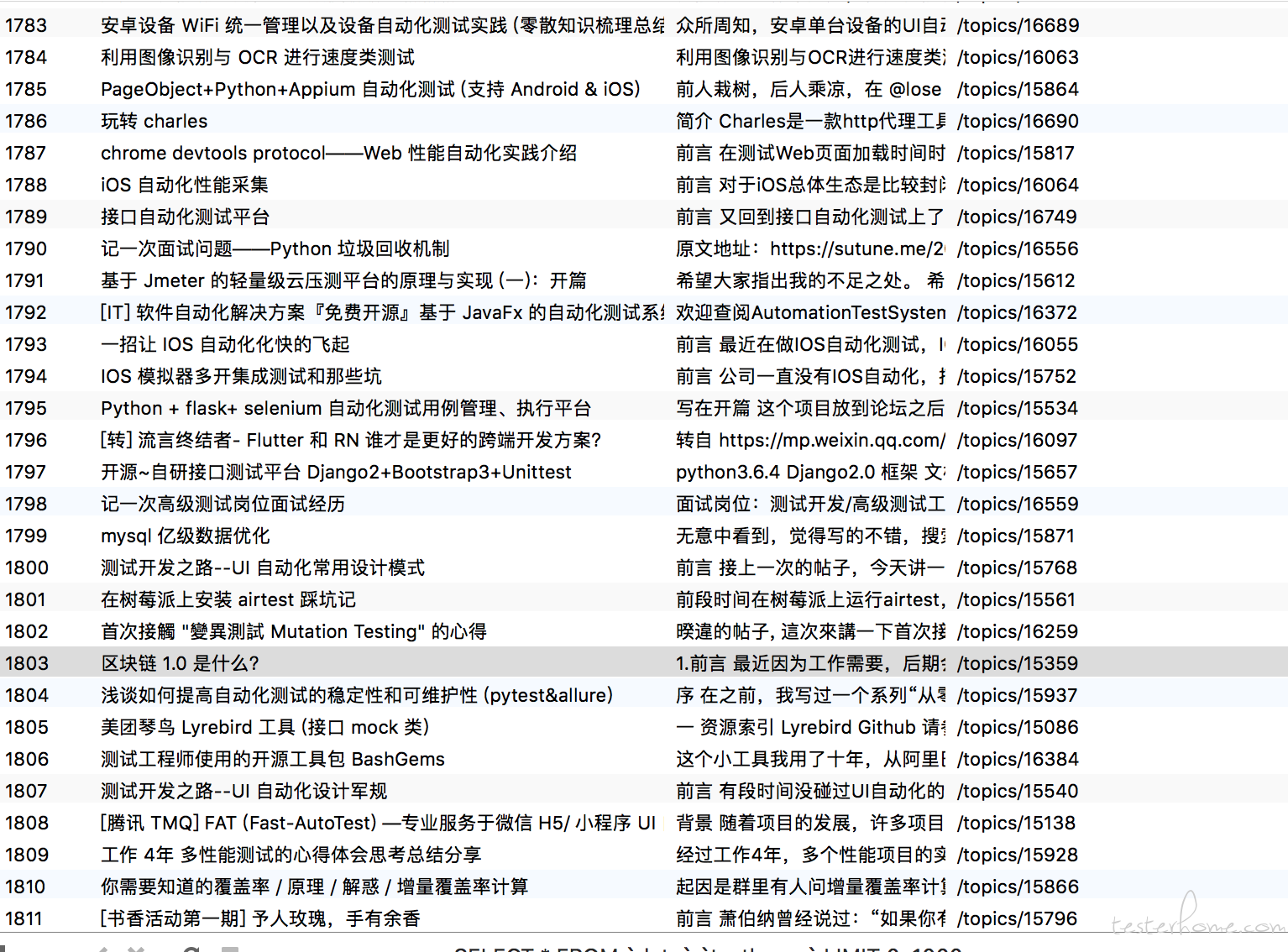

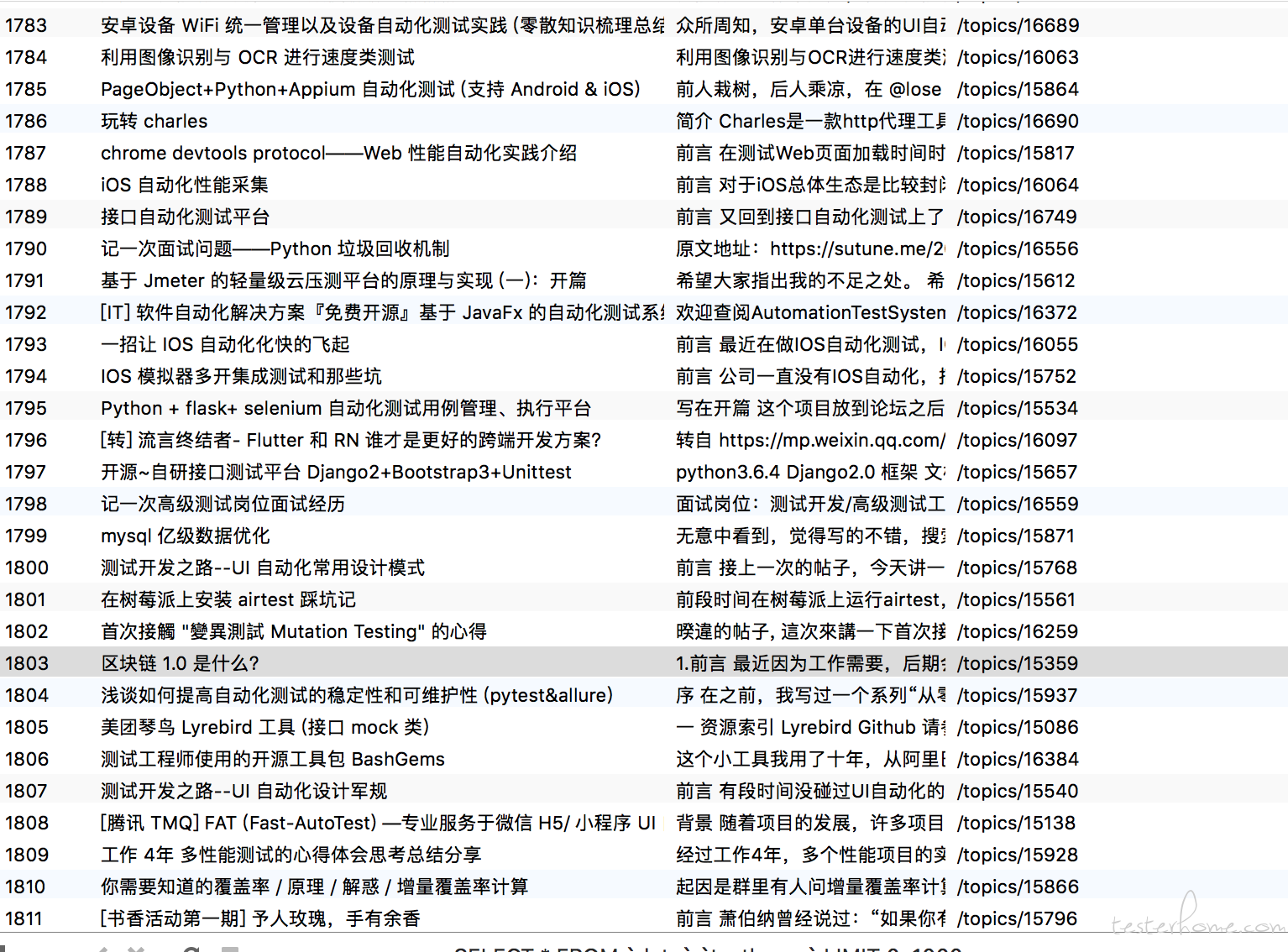
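Why utf8mb4 is needed: MySQL's legacy `utf8` charset stores at most 3 bytes per character, while emoji lie outside the Basic Multilingual Plane and take 4 bytes in UTF-8 (and 2 `char`s in a Java string). The JDK-only sketch below counts UTF-8 bytes and flags strings containing such 4-byte characters; `Utf8mb4Check` and its methods are hypothetical names for illustration, not part of the project.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical helper: does this text need MySQL's utf8mb4 charset?
class Utf8mb4Check {

    // Number of bytes the string occupies when encoded as UTF-8
    static int utf8ByteLength(String s) {
        return s.getBytes(StandardCharsets.UTF_8).length;
    }

    // True if any code point is above U+FFFF, i.e. needs 4 bytes in UTF-8,
    // which MySQL's 3-byte "utf8" charset cannot store
    static boolean needsUtf8mb4(String s) {
        return s.codePoints().anyMatch(cp -> cp > 0xFFFF);
    }

    public static void main(String[] args) {
        String emoji = "\uD83D\uDE00"; // U+1F600 GRINNING FACE as a surrogate pair
        System.out.println(emoji.length());                          // 2
        System.out.println(emoji.codePointCount(0, emoji.length())); // 1
        System.out.println(utf8ByteLength(emoji));                   // 4
        System.out.println(needsUtf8mb4("\u6D4B\u8BD5"));            // false (CJK text stays in the BMP)
        System.out.println(needsUtf8mb4("Tcloud " + emoji));         // true
    }
}
```

Chinese characters take 3 bytes each in UTF-8, so plain Chinese text fits in MySQL's `utf8`; only the 4-byte characters require utf8mb4.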

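2. Queries were too slow

First, my server has only 2 Mbps of bandwidth and slow disk I/O. I crawled only about 1,000 records, but each record's text is anywhere from a few hundred KB to several MB, so downloading query results was slow.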
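A rough estimate shows why: 2 Mbps is at most 2,000,000 / 8 = 250,000 bytes per second, so a single 1 MB text field takes about 4 seconds, and the `findAll` query's 10 rows at that size take about 40 seconds. The 1 MB average is an assumed figure for illustration, and the sketch ignores protocol overhead, latency, and disk I/O; `TransferEstimate` is a made-up name.

```java
// Back-of-the-envelope transfer time over a bandwidth-limited link.
// Assumes bandwidth is quoted in megabits per second (as cloud providers do)
// and ignores protocol overhead, latency and disk I/O.
class TransferEstimate {

    // Seconds needed to send `bytes` over a link of `mbps` megabits per second
    static double seconds(long bytes, double mbps) {
        double bytesPerSecond = mbps * 1_000_000 / 8;
        return bytes / bytesPerSecond;
    }

    public static void main(String[] args) {
        long oneMb = 1_000_000L;                    // assumed average text size per row
        System.out.println(seconds(oneMb, 2));      // 4.0
        System.out.println(seconds(10 * oneMb, 2)); // 40.0 (findAll returns 10 rows)
    }
}
```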

Solution:

a. Add paginated queries in MyBatis.

b. Add Redis: each record is stored with its crawled URL as the key, and single-record lookups go through that key.
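Both fixes can be sketched in plain Java under a few assumptions, all hypothetical: pages are 1-based, the mapper would take `offset` and `pageSize` parameters in a clause such as `limit #{offset}, #{pageSize}`, a `HashMap` stands in for Redis, and a `Function` stands in for the MyBatis `findByUrl` query. The lookup follows the cache-aside pattern: read the cache first and fall back to MySQL only on a miss.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

// Sketch only: names and the HashMap/Function stand-ins are hypothetical
class QuerySketch {

    // (a) Pagination: row offset for a 1-based page number
    static int offset(int page, int pageSize) {
        if (page < 1 || pageSize < 1) {
            throw new IllegalArgumentException("page and pageSize must be >= 1");
        }
        return (page - 1) * pageSize;
    }

    // (b) Cache-aside lookup keyed by the crawled URL
    static class TextCache {
        private final Map<String, String> cache = new HashMap<>(); // stand-in for Redis
        private final Function<String, String> database;           // stand-in for MySQL
        int databaseHits = 0;                                       // counts slow-path queries

        TextCache(Function<String, String> database) {
            this.database = database;
        }

        String get(String url) {
            String text = cache.get(url);
            if (text != null) {
                return text;                  // hit: served from memory
            }
            databaseHits++;
            text = database.apply(url);       // miss: query the database
            if (text != null) {
                cache.put(url, text);         // populate the cache for next time
            }
            return text;
        }
    }
}
```

In the real project the map calls would correspond to `jedis.get(url)` and `jedis.set(url, text)`; the first request for a URL pays the MySQL cost, later ones do not.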

And with that, it was finally done.